✨ STARLIGHT ✨ using ai to create generative metaverse worlds

written by joey zaza

I recently finished my six-week residency as part of the Loop Art Critique (LAC). The application process involved submitting to an Open Call, and I was selected to take part by guest Juror Renata Janiszewska (https://www.renatajaniszewska.com).

Loop is a non-commercial venture initiated by Ariel Baron-Robbins (https://www.arielbaronrobbins.com), sustained through personal funding and a partnership with The MUD Foundation's exhibition Media Under Dystopia 3.0: WASD. Loop is hosted in Onland, a spatial internet environment created by the non-profit The MUD Foundation, focusing on Art & Technology.

The cohort that I was a part of was the 10th. Previous cohorts have been juried by Carla Gannis, Christiana Ine-Kimba Boyle, and Lorna Mills, and the 11th is already underway.

Because LAC operates within a virtual space, most artwork submissions are digital. However, physical artworks can be submitted with the understanding that they will be viewed as digital representations. The program is divided into two phases. In the first phase, participants engage in a six-week residency featuring live critiques held every Monday and Wednesday from 12:30 to 3 PM ET. These sessions take place in a collaborative learning environment within the spatial internet environment of the metaverse.

The second phase involves participants curating a public showcase of their work, culminating in an online reception. Our exhibition is called ✨ STARLIGHT ✨, and will run from April 22 through May 29, 2024 (update: extended through July 21st!) and can be visited in the Loop Art Critique's Salon space https://verse.loop.onland.io/k8dzaEE/the-salon. An opening party/meet the artists will happen on Friday, April 26th from 1-3 PM ET. After May 29th, the exhibition will be removed and the next cohort's work will be shown.

Exhibiting artists in ✨ STARLIGHT ✨ are:

Cara DiStefano https://www.caradistefano.com

Chanika Svetvilas https://chanikasvetvilas.com

joey zaza digitalzaza.com

Keanu Hoi https://keanuhoi.art

Kylie Rah https://clutteredroom.cargo.site

I entered this critique looking to expand my practice into 3 (and more) dimensional spaces. I wanted to create work specifically for the connected multidimensional online space, while interrogating the metaverse concept, finding out what I agreed with about the concept, and taking note of the popular elements of the metaverse that I disagree with.

In general I believe the most interesting applications of the metaverse to be dimensional, gestural, and connected. The metaverse can have many dimensions, many more than three, and building for those multidimensional spaces can allow for work that is incredibly rich in its information and experience.

The gestural element of the metaverse is the critical area of relation between the human and the machine. For all of the input and output possible in such technical spaces, the gesture, the movement of the fingers and hands, is the one that is most engaged, and most built for. Keys, controllers, and mice allow for very subtle and tiny movements to be translated into large and powerful outcomes. Subtlety is key. Its exploration is an important one, and is often disregarded. In understanding the power of a system, you must understand its subtle gestures.

The connectivity of the spatial spaces is an important component. Software that is built and used by one person takes on another level of power when a second person, or a fifth, or hundredth, or m/billionth person joins. Spaces and artworks take on new meaning and application when multiple people can come together and share input/output such as spatialized audio, uploading files, chat, and so on.

You could write a hundred pages with ease about the difference between the dial up phone and the metaverse. And another hundred pages on the differences between the initial metaverse explorations of the 1950s (such as Morton Heilig's Sensorama) and those that we are seeing today. A lot of what you could imagine from digital art was done in the 1950s and 60s, but differences in the number of people using the technologies, the cheapness and power of the hardware, the openness of the software, and the interconnectedness are allowing for novel exploration of concepts such as connected multidimensional generative spatial web environments that incorporate artificial intelligence in their creation and display.

The initial ideas for this work started as an exploration of creating 3 dimensional structures, mostly in glb format, as it is a common file format used for creating 3d structures, importing them into a variety of environments such as metaverse spaces, and selling the 3d assets on nft marketplaces. One of the common ways to create glbs is the JavaScript 3D library Three.js. Three.js allows you to create simple to complex 3 dimensional structures from code, which can be saved as glbs. Creating glbs from JavaScript is attractive, as it can plug into most technical stacks without much accommodation.

In choosing a starting shape, I opted for the sphere, and embraced its shape throughout the critique. Some of the initial exploration was into the transparency of the structures. Things like lighting were thought about. Ideally I would have liked to add animation into the glbs, as the file format allows for animation, but the exploration did not end up in that direction.
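To make the idea of a sphere built from code and saved as a glb more concrete, here is a minimal sketch. It is not the Three.js code used during the critique. It is plain Python with no libraries, and the function names (`uv_sphere`, `build_glb`), the segment counts, the colour, and the opacity value are all assumptions chosen for illustration. The container layout (a 12-byte header followed by a JSON chunk and a BIN chunk) follows the glTF 2.0 specification.

```python
import json
import math
import struct


def uv_sphere(radius=1.0, segments=16, rings=8):
    """Return (positions, indices) for a latitude/longitude sphere."""
    positions = []
    for r in range(rings + 1):
        phi = math.pi * r / rings  # 0 at the top pole, pi at the bottom pole
        for s in range(segments + 1):
            theta = 2.0 * math.pi * s / segments
            positions.append((
                radius * math.sin(phi) * math.cos(theta),
                radius * math.cos(phi),
                radius * math.sin(phi) * math.sin(theta),
            ))
    indices = []
    row = segments + 1
    for r in range(rings):
        for s in range(segments):
            a = r * row + s
            b = a + row
            # Two counter-clockwise triangles per quad (zero-area at the poles).
            indices += [a, a + 1, b, a + 1, b + 1, b]
    return positions, indices


def pad4(data, fill):
    """Pad bytes to a multiple of 4, as the GLB container requires."""
    return data + fill * (-len(data) % 4)


def build_glb(positions, indices, opacity=0.5):
    """Pack one semi-transparent mesh into GLB bytes (glTF 2.0 binary)."""
    pos_bytes = b"".join(struct.pack("<3f", *p) for p in positions)
    idx_bytes = struct.pack(f"<{len(indices)}I", *indices)
    binary = pad4(pos_bytes + idx_bytes, b"\x00")
    # Read the floats back so min/max match the stored 32-bit values exactly.
    floats = struct.unpack(f"<{3 * len(positions)}f", pos_bytes)
    xs, ys, zs = floats[0::3], floats[1::3], floats[2::3]
    gltf = {
        "asset": {"version": "2.0"},
        "scene": 0,
        "scenes": [{"nodes": [0]}],
        "nodes": [{"mesh": 0}],
        "meshes": [{"primitives": [
            {"attributes": {"POSITION": 0}, "indices": 1, "material": 0}
        ]}],
        "materials": [{
            "alphaMode": "BLEND",  # lets the alpha value below show as transparency
            "pbrMetallicRoughness": {"baseColorFactor": [0.6, 0.8, 1.0, opacity]},
        }],
        "buffers": [{"byteLength": len(binary)}],
        "bufferViews": [
            {"buffer": 0, "byteOffset": 0,
             "byteLength": len(pos_bytes), "target": 34962},
            {"buffer": 0, "byteOffset": len(pos_bytes),
             "byteLength": len(idx_bytes), "target": 34963},
        ],
        "accessors": [
            {"bufferView": 0, "componentType": 5126, "count": len(positions),
             "type": "VEC3",
             "min": [min(xs), min(ys), min(zs)],
             "max": [max(xs), max(ys), max(zs)]},
            {"bufferView": 1, "componentType": 5125, "count": len(indices),
             "type": "SCALAR"},
        ],
    }
    json_chunk = pad4(json.dumps(gltf).encode("utf-8"), b" ")
    total = 12 + 8 + len(json_chunk) + 8 + len(binary)
    return b"".join([
        struct.pack("<4sII", b"glTF", 2, total),
        struct.pack("<I4s", len(json_chunk), b"JSON"), json_chunk,
        struct.pack("<I4s", len(binary), b"BIN\x00"), binary,
    ])


positions, indices = uv_sphere()
glb = build_glb(positions, indices)
```

Writing `glb` to a file opened in binary mode (for example a hypothetical `sphere.glb`) should give something a glTF viewer can load. The sketch leaves out normals, lighting, and animation, so a viewer will compute or skip those itself.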

Much of my current process makes use of artificial intelligence techniques and tools, so that continued in this critique. While starting out and considering initial forms for my sculptural works, I would take screenshots of 3d models that I made with Three.js, and use the images in the img2img tool of the Stable Diffusion model in the EmProps platform.

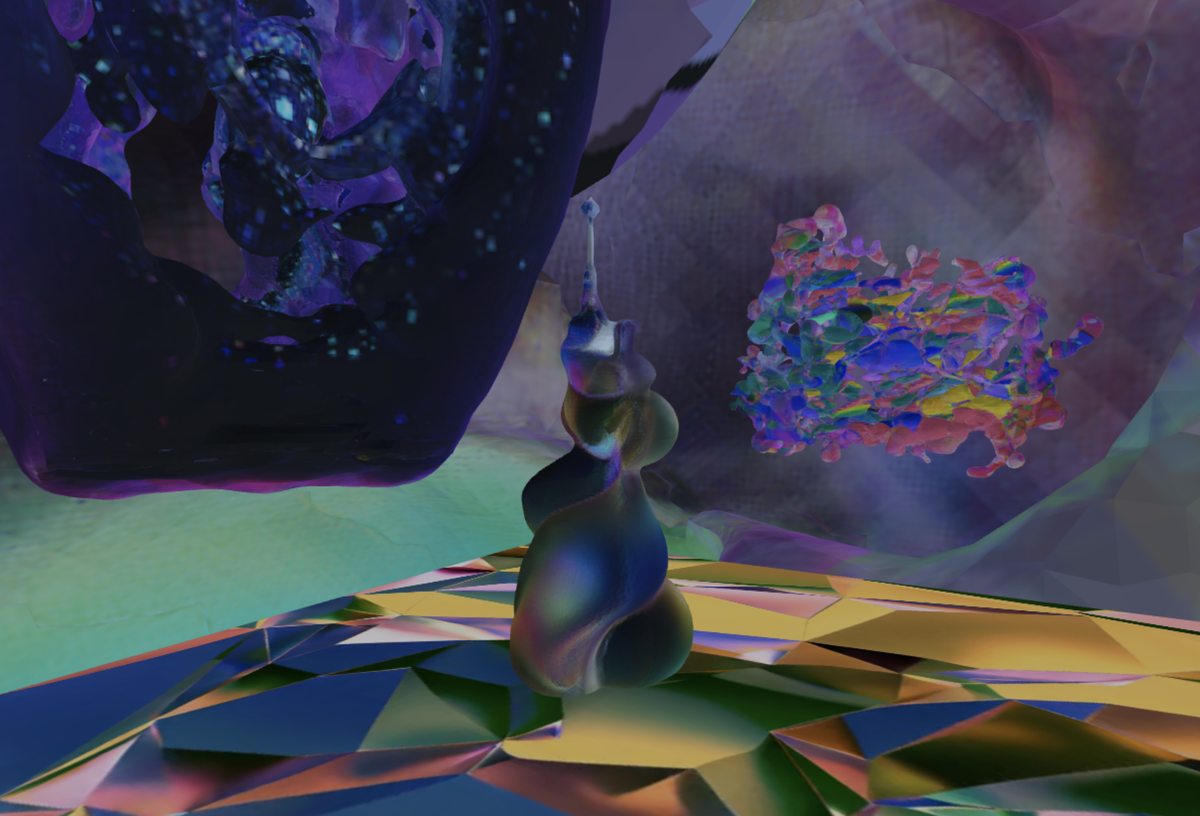

I was quite happy with some of these outputs. One of the questions heading into this critique was what the final output would be. I was imagining generative metaverse spaces, but the success of these 2 dimensional outputs was making a case for being the final output.

And another image, because if you can make an infinite amount, you should probably include at least a few.

In a search for image to glb models I came across DreamGaussian: Generative Gaussian Splatting for Efficient 3D Content Creation by Jiaxiang Tang, Jiawei Ren, Hang Zhou, Ziwei Liu, and Gang Zeng (https://github.com/dreamgaussian/dreamgaussian). I've seen models like this in the past, but this was my first time using them. DreamGaussian provides a way to convert images to 3 dimensional glb files.

The prior glb has been made available as an NFT on the Tezos blockchain and can be seen at the following link: https://objkt.com/tokens/KT1JjtAmB6FdcxaWvo4miFQR9Z3KugJMJy2G/2

DreamGaussian also provides a way for creating glbs from text prompts, although I did not explore that much in this critique. In using the model I was able to create a variety of glbs. I like some of the conversions and the model can produce interesting outputs, such as the following that incorporates an image that I took with a microscope into the creative pipeline. The photographic and artificial intelligence processes are sometimes looked down on in the world of generative art, but I strongly recommend generative artists take deep dives into both fields to help better inform their artistic practice, and to move their works beyond the two dimensional.

During this critique the objkt4objkt event took place. It's an event that happens from time to time on the objkt.com platform where artists come together to mint and collect work on the Tezos blockchain. As part of this event I ended up minting a 2d gif that used a generative image fed into Stable Diffusion. This work was incorporated into my metaverse, to show an example of moving 2d work in a 3d space. The work can be seen at this link: https://objkt.com/tokens/KT1JjtAmB6FdcxaWvo4miFQR9Z3KugJMJy2G/1

The glbs and gif were assembled using the XR Creator tool in the Loop Art Critique platform. A direct link to the space that I created can be seen at https://verse.loop.onland.io/AwidCtW/zazaverse. You can move around in this space and explore it like you would any spatial web space.

It would be nice to take this current process, and automate it so that generative metaverse environments could be created. The flow would be as follows:

✨ Create interesting 2 or 3 dimensional structures in Three.js (this code can be written by ai)

✨ Send images of these structures to Stable Diffusion to alter via img2img

✨ Run the images through DreamGaussian to create glbs

✨ Resize and position glbs using Three.js

✨ Mint the generative metaverse worlds on a platform that allows for these creative flows

This would allow for a process in which generative metaverse worlds can be created. While the current process I am using is manual, all of the prior mentioned steps can be automated, although not on current generative platforms, as there is no way to access the backend or cloud based technologies needed. These metaverse worlds can have an infinite variety, an infinite number of dimensions and space, with infinite resolution. These sorts of mediums and media have been considered previously, but important discoveries will come from thinking through this creative and technical space.
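The five steps above can be sketched as a chain of stages, each taking a world and handing it to the next. This is only an outline under stated assumptions. Every stage is a stub that records placeholder file names instead of calling Three.js, Stable Diffusion, DreamGaussian, or a minting platform. The `World` class, the stage names, and the position and scale ranges are all hypothetical. The one real piece of logic is the seeded layout, which shows how a single seed could make a generated world reproducible.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class World:
    """Everything one generated world accumulates as it moves through the flow."""
    seed: int
    files: Dict[str, List[str]] = field(default_factory=dict)
    placements: List[dict] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)


def render_structures(world: World) -> World:
    # Stub: a real version would render Three.js scenes to images.
    world.files["renders"] = [f"render_{world.seed}_{i}.png" for i in range(3)]
    return world


def alter_with_img2img(world: World) -> World:
    # Stub: a real version would send each render through img2img.
    world.files["altered"] = [
        name.replace("render", "altered") for name in world.files["renders"]
    ]
    return world


def convert_to_glb(world: World) -> World:
    # Stub: a real version would run an image-to-3d model on each image.
    world.files["glbs"] = [
        name.replace(".png", ".glb") for name in world.files["altered"]
    ]
    return world


def place_glbs(world: World) -> World:
    # Seeded randomness keeps a world reproducible: same seed, same layout.
    rng = random.Random(world.seed)
    for name in world.files["glbs"]:
        world.placements.append({
            "glb": name,
            "position": [round(rng.uniform(-10, 10), 2) for _ in range(3)],
            "scale": round(rng.uniform(0.5, 3.0), 2),
        })
    return world


def mint(world: World) -> World:
    # Stub: only records a placeholder manifest name; real minting
    # depends entirely on the platform.
    world.files["minted"] = [f"world_{world.seed}.json"]
    return world


STAGES: List[Callable[[World], World]] = [
    render_structures,
    alter_with_img2img,
    convert_to_glb,
    place_glbs,
    mint,
]


def generate_world(seed: int) -> World:
    """Run every stage in order and note which ones ran."""
    world = World(seed=seed)
    for stage in STAGES:
        world = stage(world)
        world.steps.append(stage.__name__)
    return world


world = generate_world(42)
```

Because the stages share one signature, any of them can be swapped for a real implementation, or for a different model, without touching the rest of the chain.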

One thing that I like to incorporate into work is to create works or worlds that can be explored. The work itself can be a creative space or material. It can be positioned, screenshotted, and compositions can be built from it. These outputs and ideas can then go back into models such as Stable Diffusion or DreamGaussian, and the creative process can continue.

Slow movements in the metaverse can allow you to enter and unlock worlds that are not initially visible. The world that you see may not be the one that you are operating in. Zooming in or out of the traditional three dimensional model can provide changes in perspective, while allowing you to access areas that look smaller, but can be infinite in depth. Dimensions in the metaverse space can be created on scales larger than the current physical world. Rules that can be coded in software and that control movement, interface, and perception are creatively and physically unbounded. Various pieces of software can plug into the process at any point. It's all data, and it can all be manipulated. Sound can be spatial. Movement and animation techniques can be integrated.

How should you explore infinite spaces?

How do you curate those spaces?

What sits in them?

Conceptually, the world, work, or space itself exists, regardless of whether it has been coded, regardless of whether it can be seen, regardless of whether it has been imagined. The idea can be the art. The thought can be the art. The world is there, whether you think of it or not. Whether you see it or not. Whether you understand it or not.

Software allows you to look into those worlds that are outside of the realm of the understood, visible, or imagined.

It provides a portal to access the never before seen.

It allows you to unlock things that the software developer cannot expect. The system can be incredibly complicated, and its outputs can be infinite. There is no way a human can look through these systems manually. The amount of time required to do so is infinite. You can automate the creation, exploration, and curation of these worlds, but it is a battle of infinite creation against infinite curation. It has no end.

The technologies and outputs that are being explored in the art and creative spaces are world changing. The art and creative spaces are uncovering some of the most important technical, cultural, and financial systems and processes. These changes will radically shift the way the world operates. While most of the world carries on its typical path unaware, fundamental changes have occurred. It will take years before people realize the changes and the magnitude of what has taken place in this technical, creative, and financial awakening.

You can see the ✨️ STARLIGHT ✨️ exhibition in the Loop Art Critique Salon at the following link through May 29, 2024 https://verse.loop.onland.io/k8dzaEE/the-salon

You can go directly to the zazaverse and see the work that I have written about here: https://verse.loop.onland.io/AwidCtW/zazaverse

A half hour interview between Ariel Baron-Robbins and myself is available below:

The avatar from this interview was minted from Sky Goodman's Avatars of 2027 series on EmProps (#36), which I fed into DreamGaussian to create a glb. https://emprops.ai/tokens/emprops/aa691b79-7c26-4fa9-b79c-cb4dc53c3f56

If you are interested in taking part in the Loop Art Critique, there is usually an open call on the website at https://loop.onland.io. There is a current call open with a deadline of May 20th, 2024, with jury by Cassandra Herzberg. You can apply via the following link: https://docs.google.com/forms/d/e/1FAIpQLSdjhcAlwNvaoMPz2PVyL9DdmS5kcR6QqnFVPN9iB-KRQei8ag/viewform

Can you see the ✨️ STARLIGHT ✨️? Can you access it? The glimmering, glistening, shining, shimmering light, the visible light, the invisible light, the electromagnetic radiation that can produce a (visual) sensation.