serendipity vs consequence

written by fraguada

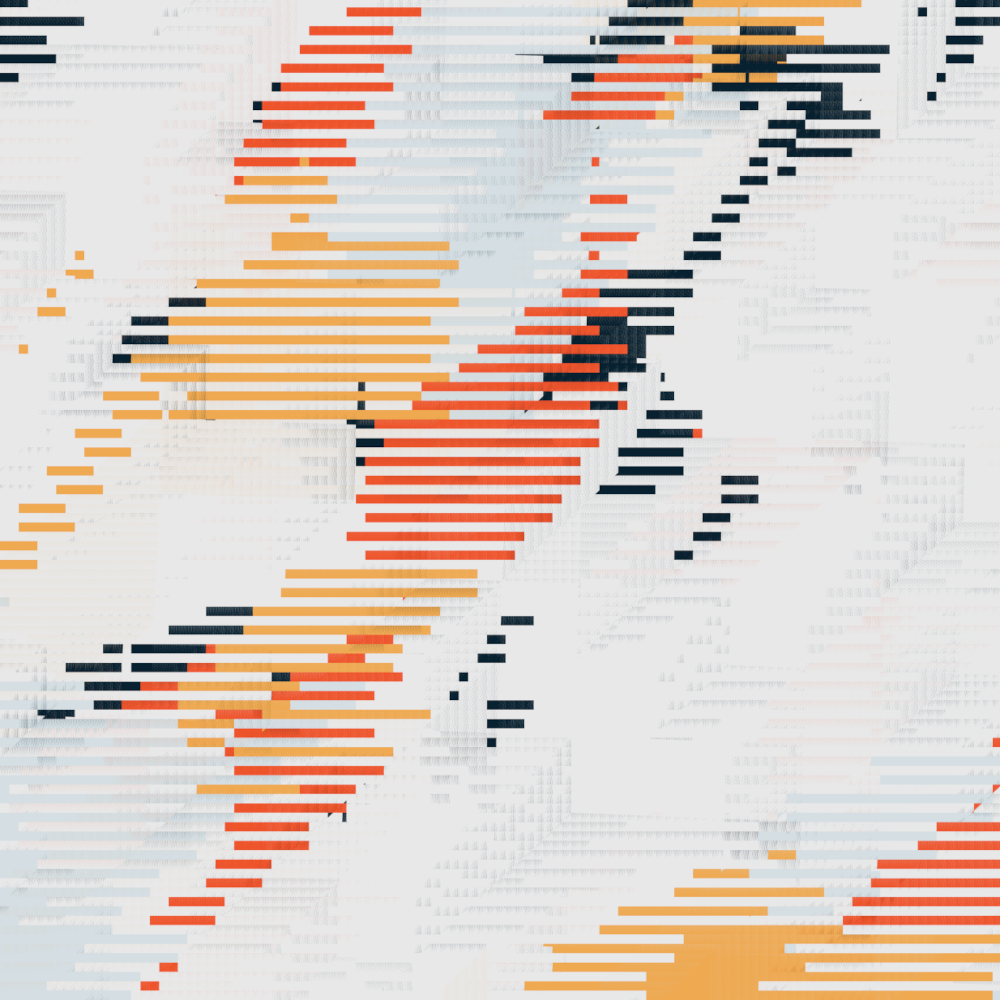

serendipity vs consequence is the result of deterministic algorithms that manifest differently depending on the device it is viewed on.

Work in the current generative art movement is based on deterministic algorithms: given the same set of input parameters, the result is exactly the same every time. The code in this project is deterministic too. It includes no conditions that adapt it one way or the other to any particular hardware device, yet because graphics drivers vary across devices, the same code generates an entirely different experience on each one. So while this work adheres to the rules of long-form generative art with its deterministic algorithms, it is heavily influenced by stochastic behavior, where the result cannot be predicted.
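To make "deterministic" concrete: on fxhash, projects are expected to draw their randomness from a pseudo-random generator seeded by the iteration's hash, so every parameter is a pure function of that hash. The sketch below is a minimal illustration under assumptions, not this project's code or fxhash's own generator: it swaps in FNV-1a hashing and the mulberry32 generator, and `paramsFromHash` and its `SceneParams` fields are hypothetical.

```typescript
// FNV-1a (32-bit) over the string's character codes: turns a hash string into a seed.
function fnv1a32(text: string): number {
  let h = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    h ^= text.charCodeAt(i);
    h = Math.imul(h, 0x01000193); // FNV prime, multiplied modulo 2^32
  }
  return h >>> 0;
}

// mulberry32: a small seeded PRNG returning numbers in [0, 1).
// The same seed always yields the same sequence.
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical parameters for a scene of stacked, nearly coplanar planes.
interface SceneParams {
  planeCount: number; // how many overlapping planes to draw (2..7)
  rotation: number;   // shared rotation in radians
  separation: number; // tiny gap between planes, small enough to invite z-fighting
}

function paramsFromHash(hash: string): SceneParams {
  const rand = mulberry32(fnv1a32(hash));
  return {
    planeCount: 2 + Math.floor(rand() * 6),
    rotation: rand() * Math.PI * 2,
    separation: rand() * 1e-4,
  };
}

const a = paramsFromHash("hypothetical-hash-123");
const b = paramsFromHash("hypothetical-hash-123");
console.log(JSON.stringify(a) === JSON.stringify(b)); // true: same hash, same parameters
```

Everything this function returns is fixed by the hash; the device-dependent part of the work only appears later, when the GPU rasterizes and depth-tests the resulting geometry.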

In a way, this project is a critique of deterministic algorithms and the fetishising of a bounded solution space of carefully crafted quantized experiences, so pervasive in the current generative art zeitgeist.

The project didn't start out with this vision, though. It began as a simple exploration of z-fighting.

z-fighting

The basis for this project is a phenomenon called z-fighting, a common artifact in 3d graphics. When two or more surfaces overlap in (or very nearly in) the same plane, the limited precision of the depth buffer makes it hard for the system to determine which one is closer to the viewpoint, so pixels from each surface show through in an unstable, often striped pattern. If we consider reality to be a continuum that includes our physical and digital experiences, z-fighting is a strictly digital phenomenon: physical objects that try to occupy the same space usually have tragic or intimate consequences.

For more information on z-fighting, check out this Wikipedia page: https://en.wikipedia.org/wiki/Z-fighting
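Why surfaces that sit almost on top of each other fight comes down to arithmetic. With a standard perspective projection, the value written to the depth buffer is nonlinear in distance and is then quantized to a fixed number of bits (commonly 24, though that depends on the device). The TypeScript sketch below assumes an OpenGL-style projection with near plane `n`, far plane `f`, and a 0..1 depth range; `windowDepth`, `quantize`, and `resolvableSeparation` are illustrative helpers, not three.js APIs.

```typescript
// Window-space depth in [0, 1] for a point at eye-space distance `d` in front of
// an OpenGL-style perspective camera with near plane `n` and far plane `f`
// (default depth range 0..1). Maps d = n to 0 and d = f to 1, nonlinearly.
function windowDepth(d: number, n: number, f: number): number {
  const zNdc = (f + n) / (f - n) - (2 * f * n) / ((f - n) * d);
  return (zNdc + 1) / 2;
}

// Converts a [0, 1] depth to the integer a fixed-point depth buffer would store.
function quantize(depth: number, bits = 24): number {
  return Math.round(depth * (2 ** bits - 1));
}

// Approximate eye-space distance covered by one depth-buffer step at distance `d`.
// Surfaces closer together than this cannot be reliably ordered by the depth test.
function resolvableSeparation(d: number, n: number, f: number, bits = 24): number {
  const slope = (f * n) / ((f - n) * d * d); // derivative of windowDepth with respect to d
  return 1 / (2 ** bits - 1) / slope;
}

const near = 0.1;
const far = 1000;
for (const d of [1, 10, 50, 500]) {
  console.log(`distance ${d}: about ${resolvableSeparation(d, near, far).toExponential(1)} units per depth step`);
}
```

With `near = 0.1` and `far = 1000`, one depth step covers roughly 0.0015 units at a distance of 50 and roughly 0.15 units at 500. Surfaces closer together than that share a depth value (or straddle two), and small rounding differences between GPUs and drivers decide which one wins each pixel.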

My background is in architecture (buildings), so throughout my education and practice I worked a lot with 3d models and different 3d modeling software. While I mostly had access to computers running Windows or macOS, I occasionally used something Linux-based. What this revealed to me is that the same 3d model in the same software might look slightly different depending on how the computer's graphics hardware rendered it. The models looked different, and so did the modeling errors!

At some point I began to explore these "errors" as an artistic technique.

The intention of this work was to sit down and formalize a process that uses z-fighting to generate unique results. For this I knew I needed to work in a 3d environment, but I wanted to keep the geometry simple. To force this simplicity, I started exploring what I could create on my phone in the three.js editor.

Partway through the project I paused and tested the code on fxhash. I noticed that the preview generated by the fxhash servers looked different from what I was seeing on my computer. At that point, what was going to be a relatively simple project turned into a multi-server obsession.

audio

Once I was able to use z-fighting reliably across different systems, I began to wonder whether I could generate audio from the visuals. Since the visuals differ from computer to computer, audio based on them would differ too.

I had attempted generative audio several times before, but was never satisfied with my approach or the result, mostly because I didn't understand all the nuances of the Web Audio API and Tone.js. This time I wanted to see what would happen if I simply mapped prominent colors on the canvas to notes. At first the results were interesting, but still felt quite random. I pushed it further by limiting the notes to specific scales. These results were much more interesting and started to sound like something I wouldn't mind listening to. HSL color values are mapped to notes, volume, and distortion.
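A minimal sketch of that kind of mapping, in TypeScript. The text does not say which HSL channel drives which parameter, so the choices here are assumptions: hue picks a degree of a minor pentatonic scale across two octaves, lightness sets the volume in decibels, and saturation sets a 0..1 distortion amount. `hslToVoice`, the scale, and the ranges are all hypothetical; the output is plain data that could then be handed to a Tone.js synth and effect chain.

```typescript
// Hypothetical scale: minor pentatonic, as semitone offsets from the root.
const MINOR_PENTATONIC = [0, 3, 5, 7, 10];
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

interface Hsl {
  h: number; // hue in degrees (any value; wrapped into 0..360)
  s: number; // saturation, 0..1
  l: number; // lightness, 0..1
}

interface Voice {
  midi: number;       // MIDI note number (60 = C4)
  note: string;       // scientific pitch name, e.g. "C3"
  volumeDb: number;   // volume in decibels
  distortion: number; // effect amount, 0..1
}

const clamp01 = (x: number): number => Math.min(1, Math.max(0, x));

function hslToVoice(c: Hsl, scale: readonly number[] = MINOR_PENTATONIC, rootMidi = 48, octaves = 2): Voice {
  // Hue walks up the scale across `octaves` octaves, so any color lands on an in-scale pitch.
  const hue = ((c.h % 360) + 360) % 360;
  const steps = scale.length * octaves;
  const index = Math.floor((hue / 360) * steps);
  const octave = Math.floor(index / scale.length);
  const midi = rootMidi + 12 * octave + scale[index % scale.length];
  return {
    midi,
    note: `${NOTE_NAMES[midi % 12]}${Math.floor(midi / 12) - 1}`,
    // Lightness drives volume: dark colors are quiet (-24 dB), light colors louder (-6 dB).
    volumeDb: -24 + 18 * clamp01(c.l),
    // Saturation drives distortion: grey stays clean, vivid colors get gritty.
    distortion: clamp01(c.s),
  };
}

console.log(hslToVoice({ h: 200, s: 0.8, l: 0.4 }).note); // C4
```

Quantizing hue to scale degrees is what does the work here: however random the colors are, every note stays inside the chosen scale.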

During development I printed the colors to the browser dev console to understand which notes they produced. I liked this so much that I kept it: you can see the generative score by opening your browser dev console while viewing this work.
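One way to make such a console score readable is to print a color swatch next to each note. Browser dev consoles style the text that follows each `%c` in a `console.log` format string with the next CSS argument. `scoreLogArgs` below is a hypothetical helper for illustration, not the project's own logging code.

```typescript
// Hypothetical helper: builds console.log arguments for one line of the score,
// a colored swatch (styled spaces) followed by the note and its HSL color.
// Browser consoles apply each CSS string to the text after the matching %c;
// Node.js accepts %c but ignores the CSS.
function scoreLogArgs(color: { h: number; s: number; l: number }, note: string): string[] {
  const css = `hsl(${Math.round(color.h)}, ${Math.round(color.s * 100)}%, ${Math.round(color.l * 100)}%)`;
  return [`%c    %c ${note}  ${css}`, `background: ${css}`, "background: transparent"];
}

console.log(...scoreLogArgs({ h: 210, s: 0.6, l: 0.5 }, "D#4"));
```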

crisis

So: a deterministic algorithm generating a compelling animated artwork on one hand, and interesting generative audio on the other. But there was a problem. Remember the screenshot of the fxhash preview? Because of how the project renders on the fxhash preview server, I'd be at the mercy of the preview and could only hope people would notice that things look different on their own devices. Could I do anything to show people how the same iteration looks on devices they might not have access to? Yes, yes I could. But it would increase the complexity of the project considerably. Enter the back-end.

the back-end

The back-end for serendipity vs consequence lets me render the project on different devices and post the results to a website where people can navigate the variations of each iteration. This is an early sketch:

The back-end consists of the following modules:

- svsc-listen - a Node.js script that watches a specific fxhash gentk id for changes, for example when someone mints a new iteration. It compares any newly minted iterations to those already being tracked, and when there are new ones, it triggers a render workflow on GitHub.

- GitHub svsc repository - this repository includes a workflow file that renders the newly minted iterations on two (at the time of writing) self-hosted runners located in my house. These are my primary development machines, so I know very well what the results look like on them. The workflow pushes the results to a web server.

- svsc-enum - a Node.js script that watches the file system and enumerates the media directory where all of the rendered results are stored. It generates a file that tells the website where to source the media from.

- svsc-www - an HTML/CSS/JS site for navigating the results
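The comparison-and-trigger step of svsc-listen could look roughly like the TypeScript sketch below. It assumes the render workflow declares a `workflow_dispatch` trigger with a hypothetical `iterations` input, and it targets GitHub's REST endpoint for creating a workflow dispatch event. How the current iteration ids are fetched from fxhash is left out, and the owner, repository, workflow file name, and helper names are placeholders.

```typescript
// Shape of the HTTP request to send; kept as plain data so it can be inspected or tested.
interface DispatchRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Ids present now that are not yet tracked, deduplicated and in ascending order.
function findNewIterations(tracked: ReadonlySet<number>, currentIds: readonly number[]): number[] {
  return Array.from(new Set(currentIds))
    .filter((id) => !tracked.has(id))
    .sort((x, y) => x - y);
}

// Builds a GitHub REST "create a workflow dispatch event" request.
// The `iterations` input name is hypothetical; workflow_dispatch inputs are strings.
function buildDispatchRequest(
  owner: string,
  repo: string,
  workflowFile: string,
  ref: string,
  newIds: readonly number[],
  token: string,
): DispatchRequest {
  return {
    url: `https://api.github.com/repos/${owner}/${repo}/actions/workflows/${workflowFile}/dispatches`,
    method: "POST",
    headers: {
      Accept: "application/vnd.github+json",
      Authorization: `Bearer ${token}`,
    },
    body: JSON.stringify({ ref, inputs: { iterations: newIds.join(",") } }),
  };
}

// Usage (not executed here; needs a token and network access):
// const fresh = findNewIterations(tracked, currentIds);
// if (fresh.length > 0) {
//   const req = buildDispatchRequest("OWNER", "svsc", "render.yml", "main", fresh, process.env.GITHUB_TOKEN ?? "");
//   const res = await fetch(req.url, { method: req.method, headers: req.headers, body: req.body });
//   // GitHub responds with 204 No Content when the dispatch is accepted.
//   fresh.forEach((id) => tracked.add(id));
// }
```

Keeping the diff separate from the HTTP call makes the comparison easy to test and means a workflow run is only dispatched when there is actually something new to render.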

The results can be explored at https://fraguada.net/svsc. Note that the videos are not optimized for the web, so they can take quite some time to load. Also, the iterations shown from before the project opened come from testing.
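On the website side, the file generated by svsc-enum is what tells the site where each iteration's renders live. A minimal sketch of that enumeration step, assuming a hypothetical layout of one folder per iteration with one video per device, and a JSON manifest as the output format (`listFiles`, `buildManifest`, and `writeManifest` are illustrative names):

```typescript
import { readdirSync, writeFileSync } from "node:fs";
import { join, relative, sep } from "node:path";

// Hypothetical media layout: <mediaDir>/<iteration>/<device>.<ext>,
// e.g. media/42/desktop-linux.mp4 and media/42/laptop-macos.mp4.
type Manifest = Record<string, string[]>;

// Recursively lists every file under `dir` as a "/"-separated path relative to `root`.
function listFiles(dir: string, root: string = dir): string[] {
  const files: string[] = [];
  for (const entry of readdirSync(dir, { withFileTypes: true })) {
    const full = join(dir, entry.name);
    if (entry.isDirectory()) {
      files.push(...listFiles(full, root));
    } else if (entry.isFile()) {
      files.push(relative(root, full).split(sep).join("/"));
    }
  }
  return files;
}

// Groups media files by their top-level folder, i.e. by iteration, in sorted order.
function buildManifest(files: readonly string[], extensions: readonly string[] = [".mp4", ".webm", ".png"]): Manifest {
  const manifest: Manifest = {};
  for (const file of [...files].sort()) {
    if (!extensions.some((ext) => file.toLowerCase().endsWith(ext))) continue;
    const [iteration, ...rest] = file.split("/");
    if (rest.length === 0) continue; // ignore files sitting directly in the media root
    (manifest[iteration] ??= []).push(file);
  }
  return manifest;
}

// Re-enumerates the media directory and rewrites the manifest the website reads.
function writeManifest(mediaDir: string, outFile: string): Manifest {
  const manifest = buildManifest(listFiles(mediaDir));
  writeFileSync(outFile, JSON.stringify(manifest, null, 2));
  return manifest;
}

// Usage (paths are placeholders):
// writeManifest("/var/www/svsc/media", "/var/www/svsc/media.json");
```

To react to new renders, `writeManifest` could be called from a file-system watcher such as Node's `fs.watch`; the sketch leaves that part out.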

tbc...