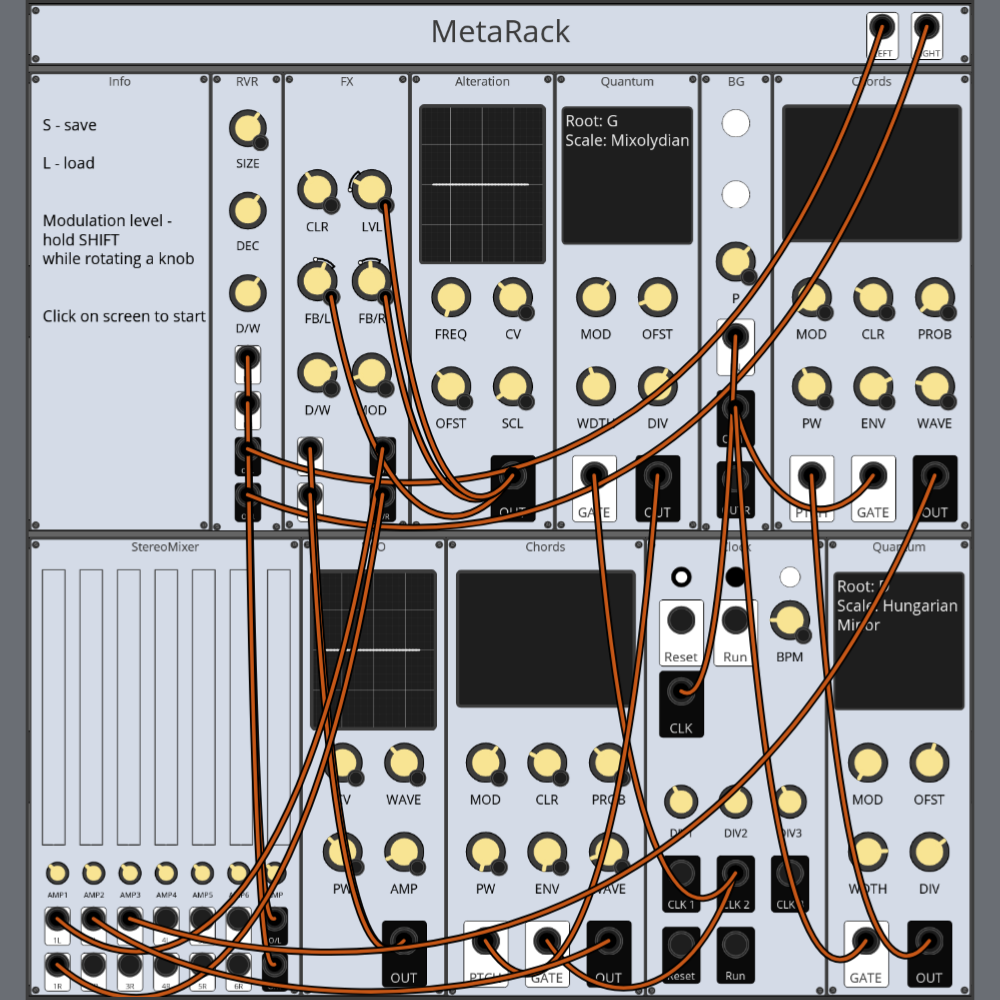

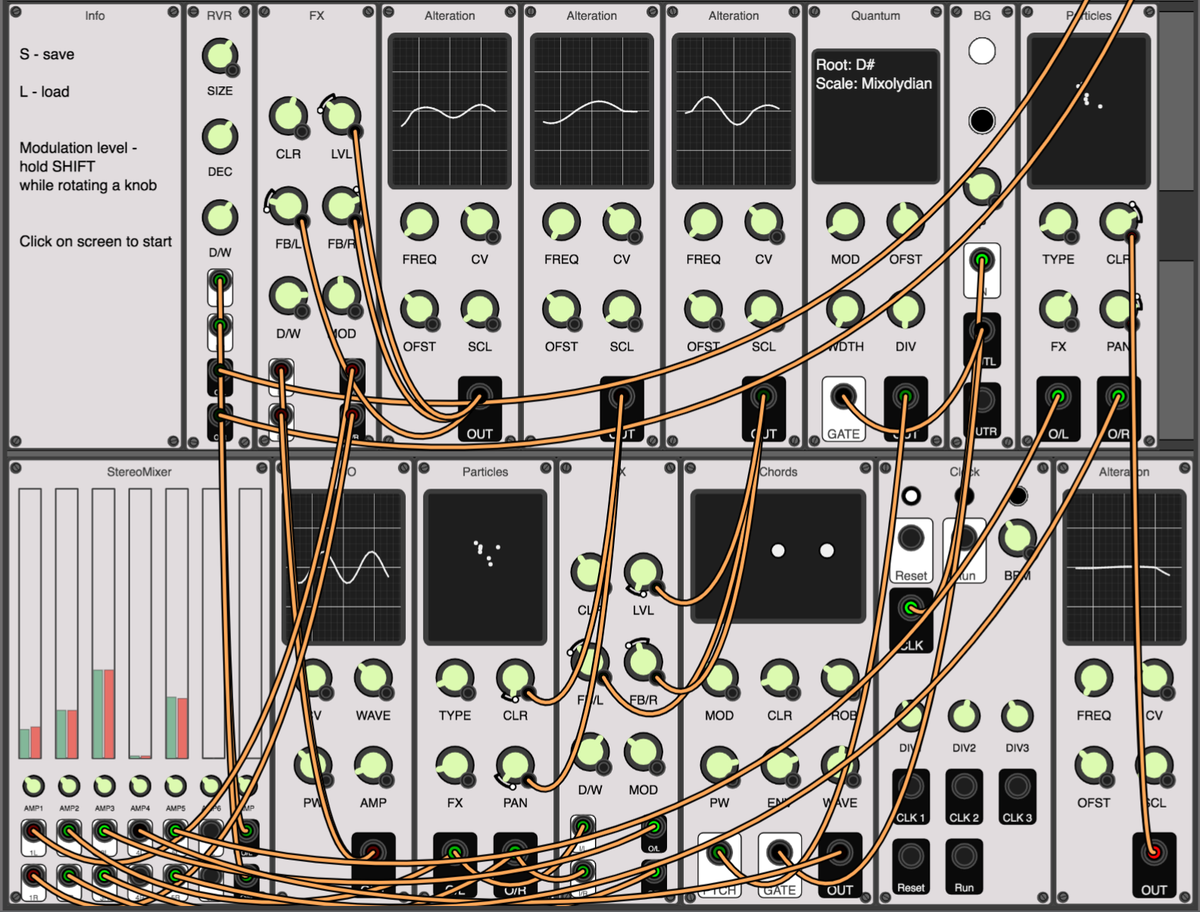

MetaRack modular synthesizer

written by ferluht

Intro

Modular synthesizers are well-known, powerful tools for making music. Their core idea is that many tiny audio modules, connected together and modulating each other, can do very complex things that cannot be done using other techniques. The way people treat modular synths is very similar to NFTs: they make unique modules, collect them, trade them, and play with them.

We are huge fans of modular synths and generative music, so naturally one of the first NFT artworks we made was a synthesizer. Half a year after the release of Ambient Landscapes Synthesizer, we are ready to announce its successor, MetaRack. MetaRack is a generative modular synthesizer capable of producing random yet nice-sounding patches. It is written from scratch with pure sample-by-sample processing, and no 3rd party libraries are used for audio handling. No samples or prepared audio files are used either: everything you hear is generated on the fly. The only library involved in MetaRack is p5js, which is used for drawing graphical elements.

How it works

In computing, sound is represented as a sequence of voltage values sampled at a fixed rate, known as the sampling frequency. To produce sound, one needs to implement discrete algorithms that process those sequences of samples. This field is known as DSP, digital signal processing. In our system we implemented plenty of small DSP blocks, such as oscillators, filters, sequencers, noise generators, etc. Sample by sample, they read their inputs, process them, and pass virtual voltage values further down the chain, which together form the sound wave.
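
To make the sample-by-sample idea concrete, here is a minimal sketch of one such DSP block, a sine oscillator. It is not MetaRack's actual code: the `SineOscillator` class, the `render` helper, and the 441 Hz test frequency are assumptions chosen for illustration.

```javascript
// Illustrative sketch only; class and function names are hypothetical.
const SAMPLE_RATE = 44100; // samples per second

class SineOscillator {
  constructor(frequency) {
    this.frequency = frequency; // in Hz
    this.phase = 0;             // position inside one cycle, 0..1
  }

  // Produce exactly one sample in [-1, 1], then advance the phase.
  process() {
    const sample = Math.sin(2 * Math.PI * this.phase);
    this.phase += this.frequency / SAMPLE_RATE;
    if (this.phase >= 1) this.phase -= 1;
    return sample;
  }
}

// Build a sequence of samples by calling process() once per sample.
function render(oscillator, numSamples) {
  const out = new Float32Array(numSamples);
  for (let i = 0; i < numSamples; i++) out[i] = oscillator.process();
  return out;
}

// At 441 Hz and 44.1 kHz, one full cycle spans 100 samples.
const buffer = render(new SineOscillator(441), 100);
```

The oscillator keeps only a phase value between calls, which is what lets it be driven one sample at a time.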

Each module implements several basic primitives: a "process" method, a "draw" method, and unified I/O ports. The "process" method is called 44,100 times per second, and on each call it processes the next sample of the audio signal using the elementary DSP blocks mentioned above. Drawing is driven by the p5 draw loop, with some additional image-buffer magic to reduce computation costs. The communication standard is the same across all modules, so one can start a wire from any output "O" and plug it into any input "I" in the patch, which results in the parameter "I" being modulated by the signal coming from "O".
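
The module contract described above could look roughly like the sketch below. The `Input`, `Output`, and `Oscillator` classes and the rule that a plugged-in wire is added to the input's base (knob) value are assumptions made for this example, not MetaRack's real API.

```javascript
// Illustrative sketch only; all names here are hypothetical.
const SAMPLE_RATE = 44100;

class Output {
  constructor() { this.value = 0; } // current virtual voltage
}

class Input {
  constructor(base) {
    this.base = base;   // value set by the knob
    this.source = null; // Output plugged into this input, if any
  }
  connect(output) { this.source = output; }
  // Assumed modulation rule: the wire's signal is added to the knob value.
  read() { return this.base + (this.source ? this.source.value : 0); }
}

class Oscillator {
  constructor(baseFrequency, amplitude = 1) {
    this.i = { freq: new Input(baseFrequency) };
    this.o = { out: new Output() };
    this.amplitude = amplitude;
    this.phase = 0;
  }
  // Called once per sample: write the output, then advance the phase.
  process() {
    this.o.out.value = this.amplitude * Math.sin(2 * Math.PI * this.phase);
    this.phase = (this.phase + this.i.freq.read() / SAMPLE_RATE) % 1;
  }
  draw() {} // rendering is omitted in this sketch
}

const lfo = new Oscillator(2, 20); // slow oscillator swinging between -20 and 20
const vco = new Oscillator(220);   // audible oscillator at 220 Hz
vco.i.freq.connect(lfo.o.out);     // wire output "O" into input "I"

const patch = [lfo, vco];
function tick() {
  for (const module of patch) module.process();
  return vco.o.out.value;
}

const samples = Array.from({ length: 4 }, tick);
```

Because every module exposes the same port objects, the wire itself is just a reference from an input to an output, and any pairing is possible.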

Randomness

MetaRack exploits fxhash randomness in three ways:

- External module randomness (knobs positions)

- Internal module randomness (variation of internal module structure)

- Patch randomness (how modules are connected)

External randomness

The first stage of sound randomization is knob randomization. Every graphically accessible module parameter can be randomized within its range. It is a pretty simple concept, and it was the first one we implemented.
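
A minimal sketch of knob randomization follows. The knob names and ranges are made up, and `mulberry32` is a common small seeded generator used here as a stand-in; in an fxhash token the random source would more likely be the platform's seeded generator.

```javascript
// Illustrative sketch only; knob names, ranges, and the PRNG choice are assumptions.

// mulberry32: a small seeded PRNG returning floats in [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Give every knob a random value inside its own [min, max) range.
function randomizeKnobs(knobs, rng) {
  return knobs.map((knob) => ({
    ...knob,
    value: knob.min + rng() * (knob.max - knob.min),
  }));
}

const knobs = [
  { name: "cutoff", min: 100, max: 8000 },
  { name: "resonance", min: 0, max: 1 },
  { name: "detune", min: -12, max: 12 },
];

const randomized = randomizeKnobs(knobs, mulberry32(12345));
```

With a seeded generator the same seed always yields the same knob positions, so a given token sounds the same on every load.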

Internal randomness

The scripting nature of JS also lets us dynamically arrange multiple modules into bigger entities. Each module in a MetaRack patch is programmatically composed of 5-20 smaller modules (VCOs, LFOs, filters, etc.) that are hidden "under" the top panel. All of those modules use the same API, though, and each of them could be placed into the patch as a separate module drawing its own frontend. This stage is affected by chaos as well. As an analogy, think of resistors on a PCB whose values differ from synth to synth.
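
The composition idea can be sketched as a module that builds itself from a random number of children sharing the same `process` method. The `Sine` and `CompositeVoice` classes, the harmonic and level ranges, and the normalized mix are assumptions for illustration, not the structure of any real MetaRack module.

```javascript
// Illustrative sketch only; class names and parameter ranges are hypothetical.
const SAMPLE_RATE = 44100;

// Smallest building block: a sine oscillator with a process() method.
class Sine {
  constructor(frequency, level) {
    this.frequency = frequency;
    this.level = level;
    this.phase = 0;
  }
  process() {
    const sample = this.level * Math.sin(2 * Math.PI * this.phase);
    this.phase = (this.phase + this.frequency / SAMPLE_RATE) % 1;
    return sample;
  }
}

// A bigger module assembled at runtime from 5 to 20 child modules.
// It exposes the same process() method as its children.
class CompositeVoice {
  constructor(rng, baseFrequency = 110) {
    const count = 5 + Math.floor(rng() * 16); // 5..20 inclusive
    this.children = [];
    for (let i = 0; i < count; i++) {
      const harmonic = 1 + Math.floor(rng() * 8); // 1..8
      const level = 0.2 + 0.8 * rng();            // 0.2..1
      this.children.push(new Sine(baseFrequency * harmonic, level));
    }
    this.totalLevel = this.children.reduce((sum, c) => sum + c.level, 0);
  }
  process() {
    let mix = 0;
    for (const child of this.children) mix += child.process();
    return mix / this.totalLevel; // keeps the output within [-1, 1]
  }
}

// Any function returning floats in [0, 1) can serve as the random source.
const voice = new CompositeVoice(Math.random);
const firstSamples = Array.from({ length: 8 }, () => voice.process());
```

Since the composite and its children share one interface, a child could just as well be promoted to a top-level module without changing the calling code.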

Patch randomness

The final stage of randomization is the choice of which modules are present in the patch and how they are connected. There are 3 functional types of modules in MetaRack: Melodic modules, Noisy modules, and Effects. To maintain overall harmony, each top-level module can be connected only to a limited number of endpoints of other modules, depending on its functional type. This functional classification helps keep the mix balanced while adding more chaos to the final sound.
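
One way to picture type-constrained patching is the sketch below. The `RULES` table (which types may feed which, and how many wires each may start) is invented for this example; the article does not state MetaRack's actual limits.

```javascript
// Illustrative sketch only; the rule table and function name are hypothetical.
const RULES = {
  melodic: { targets: ["melodic", "effect"], maxWires: 2 },
  noisy: { targets: ["effect"], maxWires: 1 },
  effect: { targets: ["effect"], maxWires: 1 },
};

// Pick random modules, then wire each one only to allowed target types,
// never exceeding the wire limit of its own functional type.
function generatePatch(rng, moduleCount) {
  const types = Object.keys(RULES);
  const modules = [];
  for (let id = 0; id < moduleCount; id++) {
    modules.push({ id, type: types[Math.floor(rng() * types.length)] });
  }

  const wires = [];
  for (const from of modules) {
    const rule = RULES[from.type];
    const candidates = modules.filter(
      (m) => m.id !== from.id && rule.targets.includes(m.type)
    );
    const wanted = Math.floor(rng() * (rule.maxWires + 1)); // 0..maxWires
    const wireCount = Math.min(candidates.length, wanted);
    for (let n = 0; n < wireCount; n++) {
      const pick = Math.floor(rng() * candidates.length);
      wires.push({ from: from.id, to: candidates[pick].id });
      candidates.splice(pick, 1); // do not wire to the same module twice
    }
  }
  return { modules, wires };
}

// Any function returning floats in [0, 1) can serve as the random source.
const randomPatch = generatePatch(Math.random, 6);
```

The randomness decides which modules and wires exist, while the rule table bounds what any single module is allowed to do to the mix.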

Roadmap

The MetaRack token can be thought of as a manifesto for a new type of NFT, the start of a big journey toward a paradigm of chainable interactive artworks. So let us briefly introduce our plans for the near future.

Since the middle of May we have been working on a kind of gallery (or subplatform) built on the fxhash ecosystem that lets collectors connect and arrange their tokens, possibly producing derivative NFTs. We plan to open our programming API to 3rd party developers within a couple of months (Q4 2022), so anyone will be able to create tokens compatible with our gallery. A lot may change, but the core planned and proven features are: a standard for inter-token communication, support for any 3rd party JS framework, and C++ support (especially useful for audio programming). We hope the gallery will launch within the next couple of months, but that is not guaranteed.

The other side of the project is an open source, web-based modular audiovisual synth engine, which is also on its way to being opened to the world. While keeping crypto in mind, it targets a much wider audience, including musicians and audio and visual programmers who may not want to join the NFT community but do want to express themselves through novel technologies. It has many advantages over traditional solutions such as VSTs and PD, mainly because of its programmatic flexibility and visual possibilities. We hope it will help transfer value from crypto to the real world.

Stay tuned!

@ferluht @alexexeev