Lost Memories - Painting like an impressionist android

written by MarkLudgatex

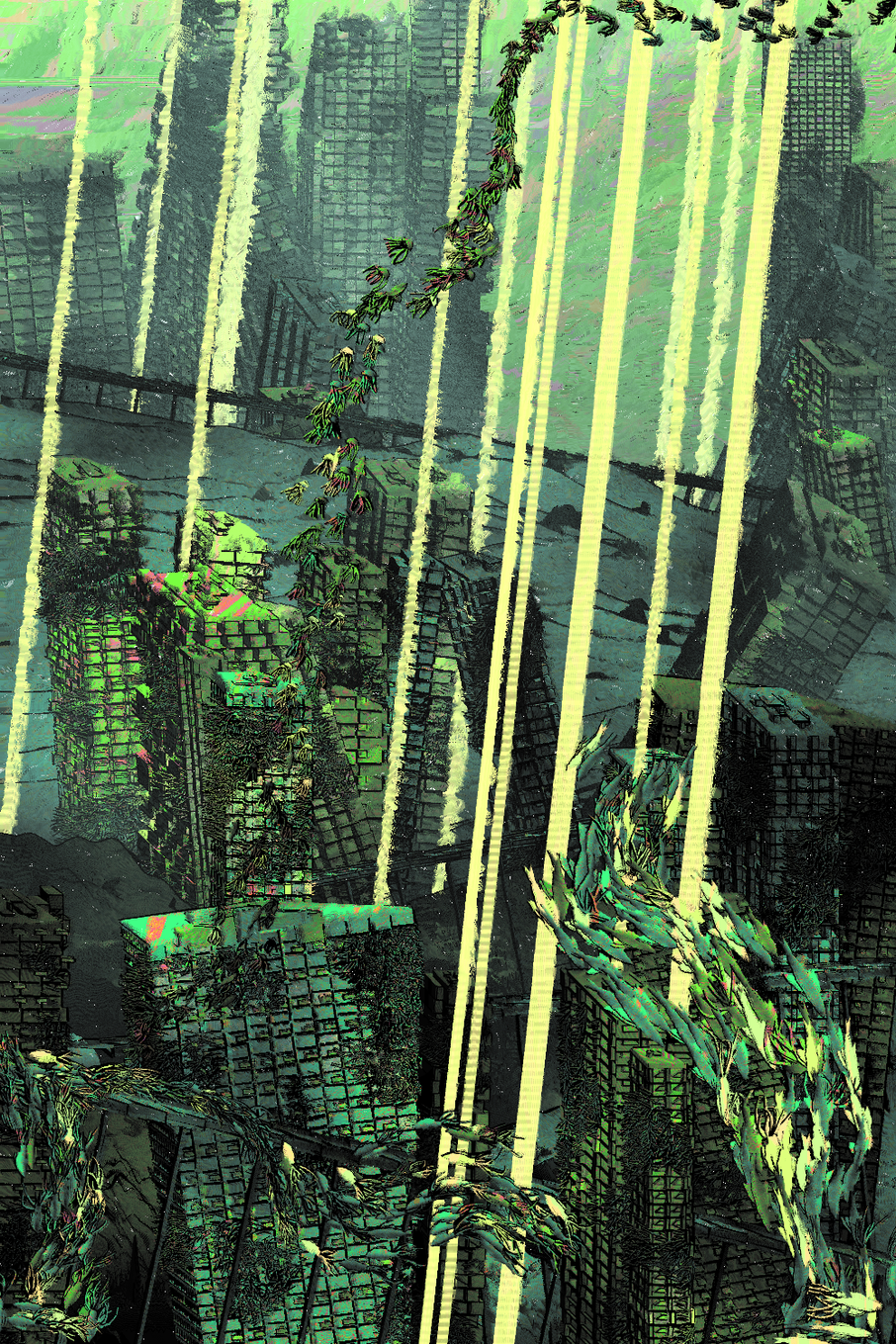

UPDATE: This piece ran into some render issues before the initial launch, so it is now REMASTERED. While solving the render issues, I decided to make large improvements to the detail and style, so some of the imagery in this article is from the older version. Thanks!

Some personal meaning…

Feel free to skip this part, though I'll try to keep it brief, fun, and unpretentious.

Although not meant to be overtly political, the piece obviously plays with strong concepts beneath the aesthetic… A huge, advanced metropolis completely underwater, destroyed by that submersion and aged by nature. Yet there are signs of life, and possibly even some structures still intact. There’s no specific time period for the setting of this piece. It could just as easily be a city from the past as an event from our future.

I’ll put aside any political tones for now and focus on a more lighthearted perspective on the meaning behind this piece. There’s something deeply fascinating about the power of nature. It’s almost unfathomable in its potential scale. We do experience it in storms, floods, lightning, etc., but these are just small twitches compared to what’s really possible.

Life has been mostly the same for humans for so long that we can’t really conceive just how stable things are right now compared to what a shift in the universe ( or, let’s say, on a planetary scale ) looks like. At the scale of the universe, we’re just spectacularly arranged dust that could be blown away by the slightest breeze.

Anyway, I really don’t mean for all this to be a bummer. This project is really about making something cool out of a fascinating concept. And the fact that we’re so cosmically tiny is kind of fun and exciting. There’s so much more going on than normal daily life, and maybe our personal problems aren’t such a big deal in an infinitely powerful universe. There’s an ocean of possibility, you could say.

Painting like an impressionist android

While I was making “The Spectrum of Nature”, I had two ideas for a future piece. The first was an underwater scene and the second was seeing how paint-like I could make some code. It seemed pretty clear that these two ideas would work well together, as an underwater scene would need a rough aesthetic to show nature's impact on the man-made world. Very quickly, all the clean perfection is obliterated into organic chaos. A paint-like style would be well suited to creating this roughness.

I decided this would be my next project as a fun departure from the “above land” landscape settings of my last two projects.

Even though this piece was never meant to be fully impressionist, I use that word because it seemed that, to get a really good paint effect, the code would have to be able to create something impressionist. If this worked, I could then dial the settings back to the paint effect I wanted.

In the next section I mention some math, but don’t worry, this will not get ( that ) heavy. I promise ( and I hope )!

There are probably many ways you can make a paint-like image from code, but to me what made sense was to think like a painter, or really like the paint brush of a painter. It seemed paint brush movement could be broken down into the two main elements found in “vector math” in physics: direction and force. It made sense to think about the movement of a brush the same way physics treats objects moving through space. The brush, after all, moves through space. It has a direction, but it also varies in force. The force can be thought of as how far the brush moves for that particular stroke, and the direction is just the angle.
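As a rough illustration of the vector idea, here is a minimal TypeScript sketch, not the piece's actual code: a single brush move stored as an angle and a force, converted to an (x, y) offset with the standard polar-to-Cartesian formula. `BrushMove` and `toDisplacement` are hypothetical names, and the angle convention (degrees measured from the +x axis) is an assumption.

```typescript
// One brush move as a vector: a direction plus a force.
// (Hypothetical names, for illustration only.)
interface BrushMove {
  angleDeg: number; // direction in degrees, measured from the +x axis
  force: number; // how far the brush travels for this move, in pixels
}

// Polar-to-Cartesian conversion: direction + force -> (dx, dy) offset.
function toDisplacement(move: BrushMove): { dx: number; dy: number } {
  const rad = (move.angleDeg * Math.PI) / 180;
  return {
    dx: Math.cos(rad) * move.force,
    dy: Math.sin(rad) * move.force,
  };
}

const move90 = toDisplacement({ angleDeg: 90, force: 3 });
console.log(move90.dx.toFixed(3), move90.dy.toFixed(3)); // 0.000 3.000
```

On a canvas, where y grows downward, a positive angle turns clockwise on screen, so 90 degrees points straight down.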

It seemed that making a paint-like image would be best done by moving pixels (the small dots that make up an image), as it would need that level of detail to look as realistic as possible. The alternative would be to use the “3D triangles” that are used to make objects in webgl (a thing that makes 3D graphics on the web).

As I'm using webgl and shaders ( a shader takes the triangles of the 3D object and turns them into pixels ), I decided to push my shader coding ability and do the paint movement by moving pixels in the shader. This has the benefit of being much faster than a normal javascript loop, as the shader works on all the pixels at once rather than one at a time.

The first major hurdle was that a paint brush creates a stroke over time. The stroke of a paint brush is really lots of little moves that keep changing direction to make a curve ( see image below ). That means lots of little vectors, as a single vector doesn’t move in a curve; it just connects two points in space. So really there are three components that make the paint brush move: direction, force, and time.
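The idea of a curve built from many small vectors can be sketched like this, assuming every move has the same force and the direction turns by a fixed amount between moves. `buildStroke` and its `turnPerStepDeg` parameter are made up for the example; a real stroke would likely vary the turn rather than keep it constant.

```typescript
type StrokePoint = { x: number; y: number };

// Build a curved stroke out of many short, straight moves. Every move has the
// same force (length), but the direction turns slightly after each one, so the
// chain of straight segments approximates a curve.
function buildStroke(
  start: StrokePoint,
  startAngleDeg: number,
  turnPerStepDeg: number, // change of direction between moves (hypothetical parameter)
  force: number, // pixels travelled per move
  steps: number,
): StrokePoint[] {
  const points: StrokePoint[] = [{ ...start }];
  let angle = startAngleDeg;
  let x = start.x;
  let y = start.y;
  for (let i = 0; i < steps; i++) {
    const rad = (angle * Math.PI) / 180;
    x += Math.cos(rad) * force;
    y += Math.sin(rad) * force;
    points.push({ x, y });
    angle += turnPerStepDeg; // turn a little before the next move
  }
  return points;
}

// 36 moves of 1 px, turning 10 degrees each time: the stroke curves all the way
// round and closes into a loop (a 36-sided polygon approximating a circle).
const stroke = buildStroke({ x: 0, y: 0 }, 0, 10, 1, 36);
const end = stroke[stroke.length - 1];
console.log(stroke.length, Math.hypot(end.x, end.y) < 1e-9); // 37 true
```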

The time component meant that I needed to update the image over time. A full paint effect would mean running the code on the image multiple times to keep moving the paint along, one pixel at a time. You can imagine this as the paint brush moving, with every tenth of a second being a new pixel moved by the brush.

Actually, the amount the paint moves each time is dictated by the force. So if the force was 1, the paint would move 1 pixel, and if it was 3, it would move 3 pixels. Well, that’s a very basic idea of how it works anyway.

Sorry, got off track; let’s get back to the movement of a brush stroke. To get a realistic paint effect, we’d need curves, as a brush stroke is never perfectly straight. A curve means the force ( the brush ) must keep changing direction, and a change in direction means lots of small moves, not one big one. Phew! Hopefully that makes sense. You may even understand the basics of “Integral calculus” ( fancy math thing that I don't fully understand ) after this.

Chaining lots of small moves together assumes that whatever is moving the pixels knows what the pixels next to them look like. If it needs to move a pixel ( to update the curve ) from one position to another, it needs to be able to read the pixel it’s taking from and write to where it wants to put it. The problem with shaders is that they can’t see the updated version of the pixels next to them. This is because the shader code runs on all the pixels at once, so when it does something to one pixel, it doesn’t yet know what the pixel next to it will be like.

To get around this problem, I had to save the image as a kind of “map” after running the paint code. By a map I just mean a saved image of all the pixels that the code can use to know where the pixels are relative to each other [ I hope these sentences are as fun to read as they are to write ]. Once the “map” was made, I could pull the updated “map image” back into the shader and run it again, using the map to tell each pixel what was next to it.
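Here is a CPU-side sketch of that "map" loop in TypeScript, written only to show the idea. It assumes a grayscale image in a `Float32Array`; in WebGL the two maps would typically be two textures that swap roles each pass, since sampling a texture while rendering into it creates a feedback loop that WebGL rejects. Each output pixel reads only the previous map, which is the same restriction a fragment shader has. `PaintMap`, `paintPass`, and `runPasses` are hypothetical names, and pulling each pixel's color from where its vector came from (a "gather") is one common way to express the move in a shader, not necessarily the approach used in the piece.

```typescript
// A grayscale image stored row by row; a stand-in for a WebGL texture.
type PaintMap = { width: number; height: number; data: Float32Array };
// Per-pixel displacement (hypothetical): how far the paint arriving at (x, y) travelled.
type Offset = (x: number, y: number) => { dx: number; dy: number };

// One paint pass. Like a fragment shader, each output pixel is computed only
// from the PREVIOUS map, never from pixels written earlier in this same pass.
// Each pixel looks back along its vector and copies the color the paint came from.
function paintPass(src: PaintMap, offset: Offset): PaintMap {
  const { width, height } = src;
  const out = new Float32Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const { dx, dy } = offset(x, y);
      // Nearest source pixel, clamped to the edges (like CLAMP_TO_EDGE sampling).
      const sx = Math.min(width - 1, Math.max(0, Math.round(x - dx)));
      const sy = Math.min(height - 1, Math.max(0, Math.round(y - dy)));
      out[y * width + x] = src.data[sy * width + sx];
    }
  }
  return { width, height, data: out };
}

// Repeated passes: the output map of each pass is fed back in as the next input.
function runPasses(start: PaintMap, passes: number, offset: Offset): PaintMap {
  let current = start;
  for (let i = 0; i < passes; i++) {
    current = paintPass(current, offset);
  }
  return current;
}

// A 5x1 image with one bright pixel, with paint moving 1 px to the right per pass.
const start: PaintMap = { width: 5, height: 1, data: new Float32Array([0, 1, 0, 0, 0]) };
const result = runPasses(start, 3, () => ({ dx: 1, dy: 0 }));
console.log(Array.from(result.data).join(",")); // 0,0,0,0,1
```

Because `paintPass` returns a new array instead of writing into its input, no pixel ever sees a half-updated neighbour, which mirrors the problem described above.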

This solved the “time” aspect. It meant that the movement of the paint was made up of lots of small moves combined, with each move based on what came before. You can see this happening as the piece loads. I deliberately made it update as it goes along, as this means elements at the back ( the furthest distance through murky water ) receive the most paint moves.
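A small counting sketch shows why updating as it goes along favours the back. It assumes, for illustration only, that layers are drawn from back to front and that a fixed batch of paint passes runs over the whole canvas after each layer is added; `passesPerLayer` is a hypothetical helper.

```typescript
// Hypothetical model: layers are drawn from the back (index 0) to the front,
// and after each layer is drawn, a batch of paint passes runs over everything
// already on the canvas.
function passesPerLayer(layerCount: number, passesAfterEachLayer: number): number[] {
  const received = new Array<number>(layerCount).fill(0);
  for (let drawn = 0; drawn < layerCount; drawn++) {
    // Layers 0..drawn are now on the canvas, so every one of them gets moved.
    for (let layer = 0; layer <= drawn; layer++) {
      received[layer] += passesAfterEachLayer;
    }
  }
  return received;
}

console.log(passesPerLayer(4, 10).join(",")); // 40,30,20,10
```

With 4 layers and 10 passes per batch, the back layer is moved 40 times and the front layer only 10.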

So we’ve done time and force. All that’s left is direction. The direction for the paint was chosen by a mixture of noise functions, with Perlin noise as the base layer. Many noise functions output values from 0 to 1, so this was scaled up to 0 to 360 to choose the angle of direction. All that’s needed then is a short bit of math to turn force (the number of pixels to move) and angle into a new pixel position. This new pixel position gets updated with the color of where the vector came from, and hey presto, paint has moved.
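The noise-to-direction step might look roughly like the TypeScript below. To keep it short, it uses simple value noise (random values at grid points, smoothly blended) as a stand-in for Perlin noise, and a single noise layer instead of a mixture. The hash constants, `paintAngle`, `movedPixel`, and the `scale` parameter are all assumptions for the sketch.

```typescript
// Deterministic pseudo-random value in [0, 1) for an integer grid point.
function hash2(ix: number, iy: number, seed: number): number {
  let h = Math.imul(ix, 374761393) ^ Math.imul(iy, 668265263) ^ Math.imul(seed, 144665);
  h = Math.imul(h ^ (h >>> 13), 1274126177);
  h ^= h >>> 16;
  return (h >>> 0) / 4294967296;
}

// 2D value noise in [0, 1): random values at integer points, smoothly
// interpolated in between. (A simpler stand-in for Perlin noise.)
function valueNoise(x: number, y: number, seed = 1): number {
  const x0 = Math.floor(x);
  const y0 = Math.floor(y);
  const fx = x - x0;
  const fy = y - y0;
  // Smoothstep easing hides the grid lines that plain linear blending shows.
  const sx = fx * fx * (3 - 2 * fx);
  const sy = fy * fy * (3 - 2 * fy);
  const a = hash2(x0, y0, seed);
  const b = hash2(x0 + 1, y0, seed);
  const c = hash2(x0, y0 + 1, seed);
  const d = hash2(x0 + 1, y0 + 1, seed);
  const upper = a + (b - a) * sx;
  const lower = c + (d - c) * sx;
  return upper + (lower - upper) * sy;
}

// Scale the 0..1 noise value to a 0..360 degree direction.
function paintAngle(x: number, y: number, scale: number): number {
  return valueNoise(x * scale, y * scale) * 360;
}

// Force (pixels to move) + angle -> the pixel the paint lands on.
function movedPixel(x: number, y: number, force: number, scale: number): { x: number; y: number } {
  const rad = (paintAngle(x, y, scale) * Math.PI) / 180;
  return {
    x: Math.round(x + Math.cos(rad) * force),
    y: Math.round(y + Math.sin(rad) * force),
  };
}

console.log(paintAngle(100, 40, 0.01), movedPixel(100, 40, 3, 0.01));
```

A small `scale` stretches the noise out, so neighbouring pixels get similar angles and the paint flows in coherent swirls rather than random jitter.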

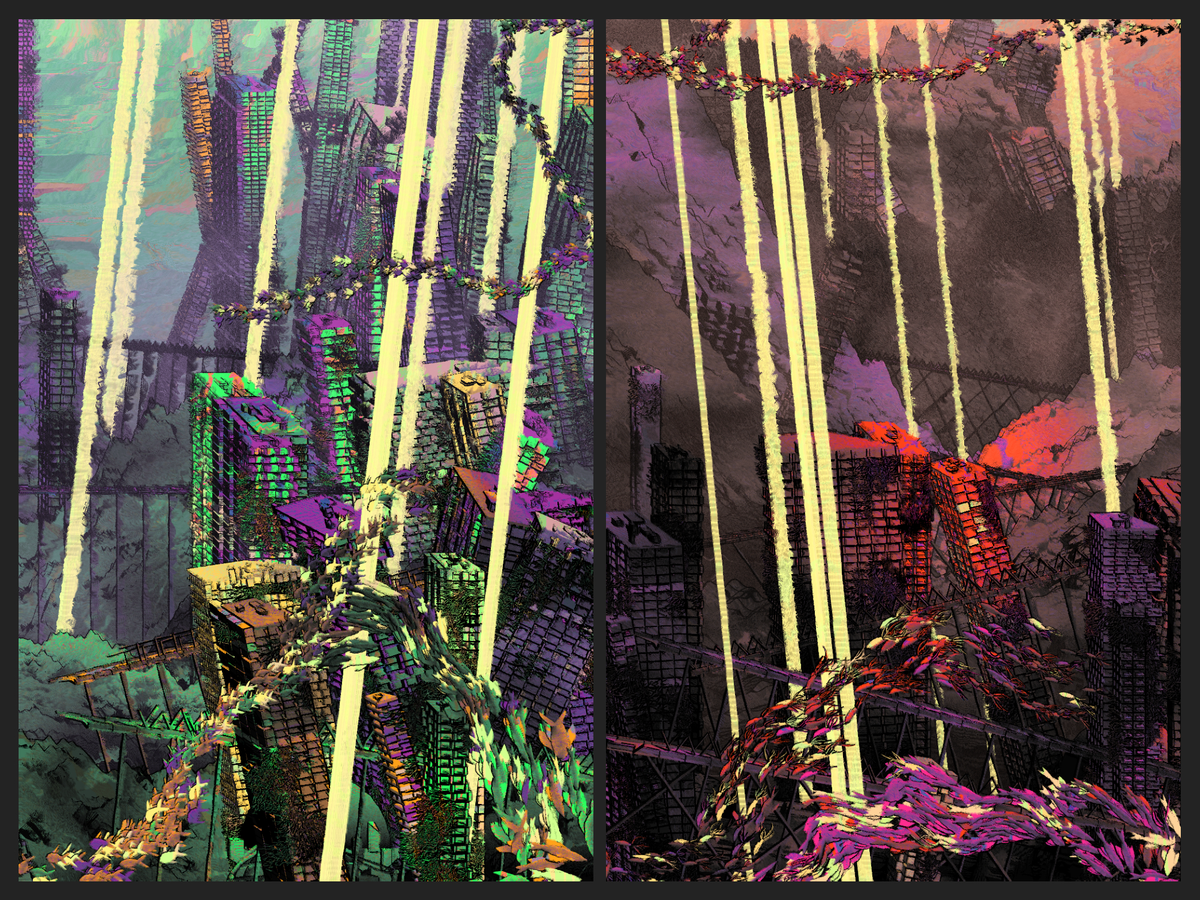

Below are some versions where the paint has been allowed to really go wild. Left is the original. The middle has an increased paint amount (there is still detail at the front, as the paint amount drops toward the front of the scene to leave detail in close elements). On the right, the paint has been allowed to just obliterate everything. Much more impressionist! In theory you could just keep increasing the settings and the paint effect would get more intense.
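The "paint amount drops toward the front" behaviour could be modelled with a depth-weighted force, sketched below. The linear falloff, the 0 (front) to 1 (back) depth range, and the `frontShare` default are assumptions for illustration; `paintAmount` plays the role of the overall setting that gets pushed up for the wilder versions.

```typescript
// Hypothetical depth-based paint force. depth is normalised so that
// 0 = nearest the viewer and 1 = farthest back. paintAmount is an overall
// strength setting; raising it pushes the whole image toward impressionism.
function paintForce(paintAmount: number, depth: number, frontShare = 0.2): number {
  const d = Math.min(1, Math.max(0, depth));
  // Linear blend: only frontShare of the force at the front, all of it at the back.
  return paintAmount * (frontShare + (1 - frontShare) * d);
}

for (const depth of [0, 0.5, 1]) {
  console.log(depth, paintForce(3, depth).toFixed(2));
}
```

Close elements keep most of their detail because they are only moved by a fraction of the force, while the far background gets the full amount.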

And that’s how I think an android would paint like an impressionist.

Special lighting for a hand drawn feel

As with “The Spectrum of Nature”, lighting plays a key role in the visual. I won’t go too much in depth here, as this is mostly covered in the “Spectrum” making-of. However, let’s take a quick, fun look at one of the darker scenes with and without lighting (see below). The atmosphere changes dramatically, and until you see the initial drawing without lighting, it’s hard to appreciate just how crucial the lighting is.

Even though this is made using webgl ( a bit of 3D web stuff ), there is no “3D lighting” of the kind you usually get in 3D environments to calculate the lighting for you. All the shapes are flat, so the lighting is calculated in a custom way based on the elements in the scene. This was deliberate, to get the hand-drawn feel that I wanted. There are 3 lighting scenarios, based on the depth of the sunken city, that vary in darkness.

Below is with the lighting on and off. On the left is the normal ( darkest ) lighting setting. On the right is with all lighting ( shadows ) turned off. Quite a difference!

Awareness of the experience of the collector

Again, drawing speed was a big factor in the work on this piece. The speed it can draw at affects two important things.

First is the collector experience. I work hard to make the image enjoyable from the moment the page opens. This means minimal black-screen time and minimal waiting between elements drawn on screen. Improving this takes a lot of extra work, but an art piece is all about experience, so I make sure every opportunity to improve the experience of the piece is maxed out.

One example of optimising for speed is that the buildings only start drawing vertically from where they can be seen. When the ground elements are created, the lowest y position of the ground on the canvas is tracked and linked to where that ground sits on the z-axis. The z-axis runs from the back of the screen outwards towards the front, and is used to decide the order of the layers from back to front.

When a building is created, it uses the tracked y positions of the ground to find the next ground in front of it, and starts drawing from that point instead of, for example, the bottom of the screen, as the ground will cover the rest anyway. The buildings have a very high level of detail, so this extra function to track the starting point made a huge saving in processing power and render time. This method isn’t perfect: the very random nature of the ground elements and the leaning of the buildings mean that there are occasionally buildings that get covered by ground. However, it’s a small price to pay for the overall performance.
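The ground-tracking trick can be sketched as follows. This assumes canvas y grows downward, that a larger z means closer to the viewer, and that each ground's "lowest y position" is the lowest point of its top edge, below which it hides everything behind it. `GroundRecord`, `recordGround`, and `buildingStartY` are hypothetical names, and the leaning-building edge cases mentioned above are not handled.

```typescript
// Hypothetical conventions: canvas y grows downward, and a larger z is closer
// to the viewer. Each ground element records the lowest point of its top edge
// (the largest y); below that line the ground hides everything behind it.
interface GroundRecord {
  z: number;
  coverY: number;
}

// Called when a ground element is generated (topEdgeYs must be non-empty).
function recordGround(z: number, topEdgeYs: number[]): GroundRecord {
  return { z, coverY: Math.max(...topEdgeYs) };
}

// A building at depth z starts drawing at the cover line of the nearest ground
// in front of it; with no ground in front, it starts at the bottom of the canvas.
function buildingStartY(z: number, grounds: GroundRecord[], canvasHeight: number): number {
  let nearest: GroundRecord | undefined;
  for (const g of grounds) {
    if (g.z > z && (nearest === undefined || g.z < nearest.z)) {
      nearest = g;
    }
  }
  return nearest === undefined ? canvasHeight : Math.min(nearest.coverY, canvasHeight);
}

const grounds = [recordGround(10, [420, 455, 440]), recordGround(20, [600, 630, 610])];
console.log(buildingStartY(5, grounds, 800)); // 455
console.log(buildingStartY(15, grounds, 800)); // 630
console.log(buildingStartY(25, grounds, 800)); // 800
```

In this example, a building at z = 5 on an 800 px tall canvas skips its bottom 345 rows, which is where the saving comes from when every row is expensive to draw.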

Below you can see the buildings at the back stop at the red line, where the ground meets them. The ground also stops where the ground in front will cover it. At the yellow line, the next layer of buildings stops, as below that line it would be covered by ground.

The second benefit of fast drawing is the possibility of more detail. Processing power saved can be put into creating more elements, which again raises the experience of the piece. So in the end you get a kind of multiplier: “collector not waiting experience” x “more detail experience” = much better experience.

At the deepest part, the ending…

Thanks so much for reading this article! I really appreciate the interest in my work!