g-scapes: using ai to make generative animations and sound

written by joey zaza

For my previous release, e-scapes, I explored how artificial intelligence could be used in the creative pipeline. e-scapes combined p5.js, a technology commonly used to create generative art, with the text-to-image technology of Stable Diffusion. The traditional image manipulation software Lightroom was also part of that process. A more in-depth write-up on the experience can be found in the following fxtext article: https://www.fxhash.xyz/article/e-scapes%3A-generative-ai-artworks-on-ethereum

g-scapes is a series that explores similar themes, but it is written entirely in web technologies like JavaScript. In general I enjoy work that moves and makes sound, so while I was happy with the still images produced by Stable Diffusion, I am glad to return to technologies that allow for sound and movement.

I wanted to continue to use AI in the creation of this work, so I explored prompting ChatGPT to create code for this artwork.

For example, in order to create the sound for this artwork, I used a prompt as follows:

Write a thorough HTML Audio and javascript codebase with many classes and functions that can create a sound composition. The code should create a song that is capable of playing for an infinite length of time, and the sound should be constantly evolving and changing. The composition should include a variety of interesting sounds. The generative soundtracks should be well balanced, and include artistic and experimental sound. The HTML Audio and javascript codebase should be capable of generating a random never ending sound based composition every time the code is run, which is different from any other generation. So be sure to randomize all of the elements, such as timing, sounds, repetitions, gain, fading in and out, modulation, feedback, and other musical concepts to allow for maximum diversity of sound. Make sure not to use .mp3 files, and instead programmatically create the sounds using HTML Audio and javascript.

That prompt produced the following code, which was used almost exactly in the g-scapes project:

    const piano = new Audio();
    const pianoNoteFrequencies = [
      261.63, 293.66, 329.63, 349.23, 392, 440, 493.88
    ]; // C, D, E, F, G, A, B frequencies
    const drum1 = new Audio();
    const drum2 = new Audio();
    const drum3 = new Audio();
    const bass = new Audio();

    function playComposition() {
      const intervalTime = 1000;
      let pianoNoteIndex = 0;
      let drumIndex = 0;
      const timer = setInterval(function() {
        piano.currentTime = 0;
        piano.src = "data:audio/wav;base64," + generatePianoNote();
        piano.play();
        if (drumIndex % 4 === 0) {
          drum1.currentTime = 0;
          drum1.src = "data:audio/wav;base64," + generateDrum1();
          drum1.play();
        }
        if (drumIndex % 8 === 0) {
          drum2.currentTime = 0;
          drum2.src = "data:audio/wav;base64," + generateDrum2();
          drum2.play();
        }
        if (drumIndex % 16 === 0) {
          drum3.currentTime = 0;
          drum3.src = "data:audio/wav;base64," + generateDrum3();
          drum3.play();
        }
        bass.currentTime = 0;
        bass.src = "data:audio/wav;base64," + generateBassNote();
        bass.play();
        pianoNoteIndex++;
        drumIndex++;
      }, intervalTime);
    }

    function generatePianoNote() {
      const randomNoteIndex = Math.floor(Math.random() * pianoNoteFrequencies.length);
      const duration = Math.random() * (4 - 0.5) + 0.5;
      return generateWave(pianoNoteFrequencies[randomNoteIndex], duration);
    }

    function generateDrum1() {
      const duration = 0.25;
      return generateWave(200, duration);
    }

    function generateDrum2() {
      const duration = 0.5;
      return generateWave(150, duration);
    }

    function generateDrum3() {
      const duration = 0.75;
      return generateWave(100, duration);
    }

    function generateBassNote() {
      const bassNoteFrequency = 130.81;
      const duration = Math.random() * (2 - 0.5) + 0.5;
      return generateWave(bassNoteFrequency, duration);
    }

    function generateWave(frequency, duration) {
      const audioContext = new (window.AudioContext || window.webkitAudioContext)();
      const sampleRate = audioContext.sampleRate;
      const numSamples = duration * sampleRate;
      const waveData = audioContext.createBuffer(1, numSamples, sampleRate);
      const waveDataArray = waveData.getChannelData(0);
      const twoPiF = 2 * Math.PI * frequency / sampleRate;
      for (let i = 0; i < numSamples; i++) {
        waveDataArray[i] = Math.sin(i * twoPiF);
      }
      const waveDataBuffer = audioContext.createBufferSource();
      waveDataBuffer.buffer = waveData;
      waveDataBuffer.connect(audioContext.destination);
      waveDataBuffer.start();
      return exportWaveData(waveDataArray);
    }

    function exportWaveData(waveDataArray) {
      const waveDataFloat32 = new Float32Array(waveDataArray);
      const waveDataByteArray = new Uint8Array(waveDataFloat32.buffer);
      let output = "";
      for (let i = 0; i < waveDataByteArray.length; i++) {
        output += String.fromCharCode(waveDataByteArray[i]);
      }
      return btoa(output);
    }

Sure, it didn't provide the drum and bass it was trying to get at, but that entire code was essentially created in seconds, from a prompt that most people are capable of creating. If you have access to artificial intelligence technology like ChatGPT, you can create very decent code, even if you don't have any technical understanding of software. Software engineers who incorporate artificial intelligence technologies into their practices will be far ahead of those who do not.

The sound leaves some things to be desired, but better sound could likely be created if the limits placed on ChatGPT were lifted.
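One likely reason the result sounds like plain tones rather than drums and bass: a `data:audio/wav` URI is expected to hold a complete WAV file, including its RIFF header, while `exportWaveData` base64-encodes raw 32-bit float samples with no header. The `Audio` elements probably cannot decode that, so what is heard most likely comes from the `waveDataBuffer.start()` call inside `generateWave`. The following is a minimal sketch of what a valid WAV payload could look like. It is not part of g-scapes, and the names `encodeWav`, `sineSamples`, and `toDataUri` are hypothetical.

```javascript
// Hypothetical helper: encode mono float samples (range -1..1) as a
// 16-bit PCM WAV file. Returns a Uint8Array with the 44-byte header included.
function encodeWav(samples, sampleRate) {
  const bytesPerSample = 2;
  const dataSize = samples.length * bytesPerSample;
  const buffer = new ArrayBuffer(44 + dataSize);
  const view = new DataView(buffer);

  const writeString = (offset, text) => {
    for (let i = 0; i < text.length; i++) {
      view.setUint8(offset + i, text.charCodeAt(i));
    }
  };

  writeString(0, "RIFF");
  view.setUint32(4, 36 + dataSize, true);                // file size minus 8 bytes
  writeString(8, "WAVE");
  writeString(12, "fmt ");
  view.setUint32(16, 16, true);                          // size of the fmt chunk
  view.setUint16(20, 1, true);                           // format 1 = PCM
  view.setUint16(22, 1, true);                           // one channel (mono)
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * bytesPerSample, true); // bytes per second
  view.setUint16(32, bytesPerSample, true);              // block align
  view.setUint16(34, 16, true);                          // bits per sample
  writeString(36, "data");
  view.setUint32(40, dataSize, true);

  // Convert each float sample to a signed 16-bit integer.
  for (let i = 0; i < samples.length; i++) {
    const clamped = Math.max(-1, Math.min(1, samples[i]));
    view.setInt16(44 + i * 2, Math.round(clamped * 32767), true);
  }
  return new Uint8Array(buffer);
}

// Hypothetical helper: the same sine generation as generateWave,
// but without creating an AudioContext.
function sineSamples(frequency, duration, sampleRate) {
  const numSamples = Math.floor(duration * sampleRate);
  const samples = new Float32Array(numSamples);
  const step = (2 * Math.PI * frequency) / sampleRate;
  for (let i = 0; i < numSamples; i++) {
    samples[i] = Math.sin(i * step);
  }
  return samples;
}

// Hypothetical helper: wrap the WAV bytes in a data URI for an Audio element.
function toDataUri(wavBytes) {
  let binary = "";
  for (let i = 0; i < wavBytes.length; i++) {
    binary += String.fromCharCode(wavBytes[i]);
  }
  return "data:audio/wav;base64," + btoa(binary);
}
```

In a browser, something like `piano.src = toDataUri(encodeWav(sineSamples(440, 1, 44100), 44100))` would then give the `Audio` element a file it can decode.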

Similar prompts were written to have ChatGPT create p5.js code for the visuals and animation.

Sometimes ChatGPT would return code that did not run. This code was either discarded or fed back to ChatGPT with a request for working code. ChatGPT can take code that does not work, along with a prompt like "Please take this codebase and make sure the following error does not occur: __________", and it can often fix the error and give you code that works.
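The repair loop described above can be sketched as two small helpers. This is an illustration only: `findError` and `buildFixPrompt` are hypothetical names, and the sketch assumes the generated snippet can be run in isolation, which would not hold for code that depends on p5.js or the page.

```javascript
// Hypothetical helper: run a snippet and return null if it runs cleanly,
// or the error text if it throws (syntax errors included).
// Caution: this executes the snippet, so only use it on code you are willing to run.
function findError(code) {
  try {
    new Function(code)();
    return null;
  } catch (err) {
    return err.name + ": " + err.message;
  }
}

// Hypothetical helper: fill the blank in the repair prompt with the error
// text and append the broken code below it.
function buildFixPrompt(code, errorMessage) {
  return [
    "Please take this codebase and make sure the following error does not occur: " + errorMessage,
    "",
    code,
  ].join("\n");
}

// Example: a snippet that calls a function that was never defined.
const brokenCode = "drawSun();";
const errorText = findError(brokenCode);
const prompt = errorText === null ? null : buildFixPrompt(brokenCode, errorText);
```

The resulting `prompt` string is what would be pasted back into ChatGPT.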

Some of the visuals and animations that were generated were not interesting. So when using AI technologies in your creative pipeline at the moment, you will have to filter the results for the ones you find interesting.

ChatGPT is capable of writing long and complex codebases, but this feature is not provided to the majority of users. This raises the age-old questions of who controls the technology, and who is allowed to use it.

It would be wild to have ChatGPT return, say, a 10,000-line or a million-line codebase, and while that seems technically possible, it is not allowed. Most code returned was around 100 lines, and ChatGPT either told me to finish creating the artwork myself or provided me with individual steps that I needed to complete. While I understand the need to keep resource utilization low, it is important for companies like OpenAI to make their technologies available to all people who are working with good intentions.

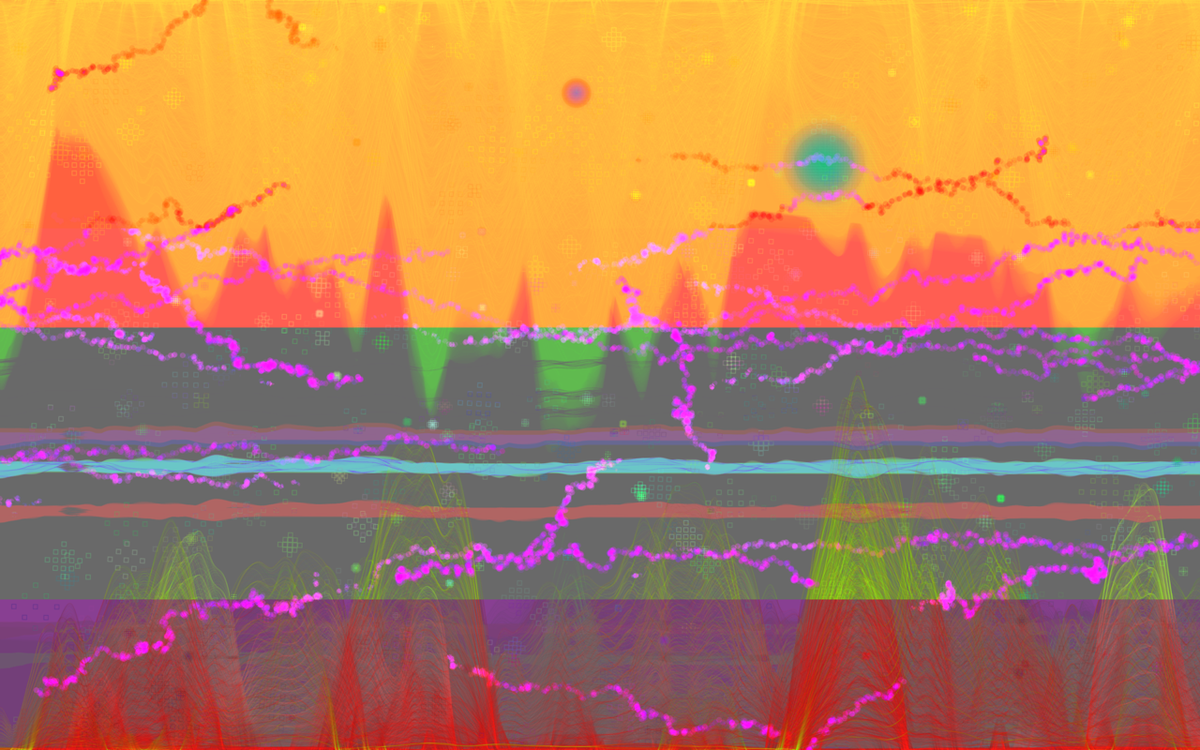

Out of several days of prompting, I ended up with several codebases that I incorporated into the final codebase for this artwork. ChatGPT provided code for the background. It provided code for the waves at the center of the screen. It provided code for the waves that run along the top of the screen. I asked it to take that code and rewrite it to allow for waves along the bottom of the screen, and it took the code and my prompt and returned code for the waves that run along the bottom of the screen. It provided code for the sun and the moon elements. It provided code for the sprites that wind their way through the artwork. It provided code for the stamp that colors the artwork. And it provided code for the sound that is generated.

I took all of the snippets of code that ChatGPT provided, and incorporated them into one final codebase. This manual process probably could have been automated by ChatGPT itself if it allowed for results based on longer codebases.

I added some code to bring the randomization in alignment with what I felt would make for interesting generative art. I added code to be able to have control over the colors that were used, but for the most part, AI wrote this code, and a human was in the loop to provide some basic integration and mild creative input.

This code took about five days to write, so AI has shown itself to be very fast at iterating on creative concepts and producing high-end code. Some of the animations of this project are better than what I would have come up with had I written this code from scratch. Some of the great power of AI systems lies in their ability to give you unexpected outputs. This may mean you need to be clearer in your prompting, but in general these unexpected outcomes are wonderful for the creative space. Unexpected outcomes in more serious systems can of course be very dangerous, so it is something to consider when using these technologies in systems where the fault tolerances are tighter.

There are a couple of notes on this project. First, the preview is intended to be a darker, less saturated, greyed out version of the running project. This artistic decision was made as a rejection of the idea that the preview is the most important part of the artwork.
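As a rough illustration of how a darker, greyed out preview could be derived from the running colors, here is a minimal sketch. It is an assumption about one possible approach, not the code used in g-scapes; `toPreviewColor`, `chooseColor`, the `isPreview` flag, and the default amounts are all hypothetical.

```javascript
// Hypothetical helper: turn an [r, g, b] color (0..255) into a darker,
// less saturated version by blending toward gray and then scaling down.
function toPreviewColor([r, g, b], desaturate = 0.7, darken = 0.5) {
  // Perceived brightness using the common Rec. 601 luma weights.
  const gray = 0.299 * r + 0.587 * g + 0.114 * b;
  // desaturate = 0 keeps the channel, desaturate = 1 replaces it with gray.
  const mix = (channel) => channel + (gray - channel) * desaturate;
  return [r, g, b].map((channel) => Math.round(mix(channel) * (1 - darken)));
}

// Hypothetical helper: use the muted color only while rendering the preview.
function chooseColor(color, isPreview) {
  return isPreview ? toPreviewColor(color) : color;
}
```

The running artwork would keep its full colors, and only the preview capture would pass through `toPreviewColor`.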

One of the advantages of digital art is that it is capable of movement and sound in a way that more traditional mediums are not. By clicking play on this artwork and experiencing it as intended, you will be rewarded with a vivid world of sound and color.

A second note is that while the visuals are deterministic, the sound is not. While the animations were more liberally adjusted to allow for determinism, the code for the sound is pasted almost exactly as it was output from ChatGPT. It is more important to me to preserve the code that ChatGPT created, to provide an example of how AI can create sound. I have thought about enhancing this sound with a variety of other instruments, randomization, notes, musical concepts, but I felt that would take away from the importance of the work.
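The sound is non-deterministic because the generated code draws from `Math.random()`. For readers curious what a deterministic variant might look like, here is a minimal sketch that swaps in a seeded generator. It is not what g-scapes does: `mulberry32` is a well-known small PRNG used here as a stand-in, `pickPianoNote` is a hypothetical counterpart to `generatePianoNote`, and the seed value is arbitrary. On fxhash, the random numbers would presumably come from the platform's own hash-seeded generator instead.

```javascript
// mulberry32: a small seeded PRNG. The same seed always yields the same
// sequence of numbers in the range [0, 1).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

const pianoNoteFrequencies = [
  261.63, 293.66, 329.63, 349.23, 392, 440, 493.88
]; // C, D, E, F, G, A, B frequencies

// Hypothetical counterpart to generatePianoNote: makes the same two random
// choices (note and duration), but draws them from the supplied generator.
function pickPianoNote(rand) {
  const index = Math.floor(rand() * pianoNoteFrequencies.length);
  const duration = rand() * (4 - 0.5) + 0.5;
  return { frequency: pianoNoteFrequencies[index], duration };
}

// Two generators built from the same (arbitrary) seed produce the same notes.
const firstRun = mulberry32(12345);
const secondRun = mulberry32(12345);
const noteA = pickPianoNote(firstRun);
const noteB = pickPianoNote(secondRun);
```

Here `noteA` and `noteB` describe the same note, which is the property a deterministic soundtrack would need.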

The decision by fxhash 2.0 to add Ethereum as a cryptocurrency creates a wonderful option for artists exploring sustainable financial models for their work, as well as for collectors who are interested in collecting work on that chain. I thank everyone involved for their work in helping to build the future of art, and look forward to all of the innovations to come from this dedication over the years. The digital art space that was ignored for so long is finally being celebrated.

Thank you to the collectors who are helping to fund the wild and newfangled work that we are so lucky to be able to enjoy.

You can explore g-scapes here: https://www.fxhash.xyz/generative/slug/g-scapes

The present is cross-chain.

Enjoy your life.

Technologies used: HTML, HTML Audio, CSS, JavaScript, p5.js, ChatGPT